If you are going to play with the ChatGPT API like me, you might use an API call like this:

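```javascript
const axios = require("axios");

// Chat Completions endpoint; the API key is read from an environment variable here
const CHATGPT_URL = "https://api.openai.com/v1/chat/completions";
const OPENAI_API_KEY = process.env.OPENAI_API_KEY;

async function getChatGPTResponse(prompt) {
  try {
    const response = await axios.post(
      CHATGPT_URL,
      {
        model: "gpt-4", // this choice decides how much every call costs
        messages: [{ role: "user", content: prompt }],
        max_tokens: 100, // caps the length (and cost) of each reply
      },
      {
        headers: {
          Authorization: `Bearer ${OPENAI_API_KEY}`,
          "Content-Type": "application/json",
        },
      }
    );
    return response.data.choices[0].message.content.trim();
  } catch (error) {
    console.error("ChatGPT error:", error.response?.data || error.message);
    return "Error with ChatGPT.";
  }
}
```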
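Besides the reply, each Chat Completions response also carries a `usage` object with token counts. Those counts are what OpenAI bills you for. Here is a minimal sketch of pulling both out of `response.data`. The helper name `parseChatResponse` is hypothetical, and the sample object is made up to match the response shape. It is not a real API log.

```javascript
// Hypothetical helper: split a Chat Completions response body into
// the reply text and the token counts reported in `usage`.
function parseChatResponse(data) {
  const text = data.choices[0].message.content.trim();
  const usage = data.usage ?? {}; // fall back to an empty object if usage is missing
  return {
    text,
    promptTokens: usage.prompt_tokens ?? 0,
    completionTokens: usage.completion_tokens ?? 0,
  };
}

// Made-up response body with the same shape as a real one
const sample = {
  choices: [{ message: { role: "assistant", content: "  Hello!  " } }],
  usage: { prompt_tokens: 12, completion_tokens: 3, total_tokens: 15 },
};

console.log(parseChatResponse(sample));
```

While testing, you could call it on `response.data` inside `getChatGPTResponse` and log the token counts. That way you see how much each debate turn consumes.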

Pay attention to the “model” parameter! Depending on where you copied this code snippet from, just trying out the API can cost you much more than you expect. In my case, I started out with the “gpt-4” model while testing my AI debate web app:

https://shengzhou.nl/tech/aiplayground/debate/

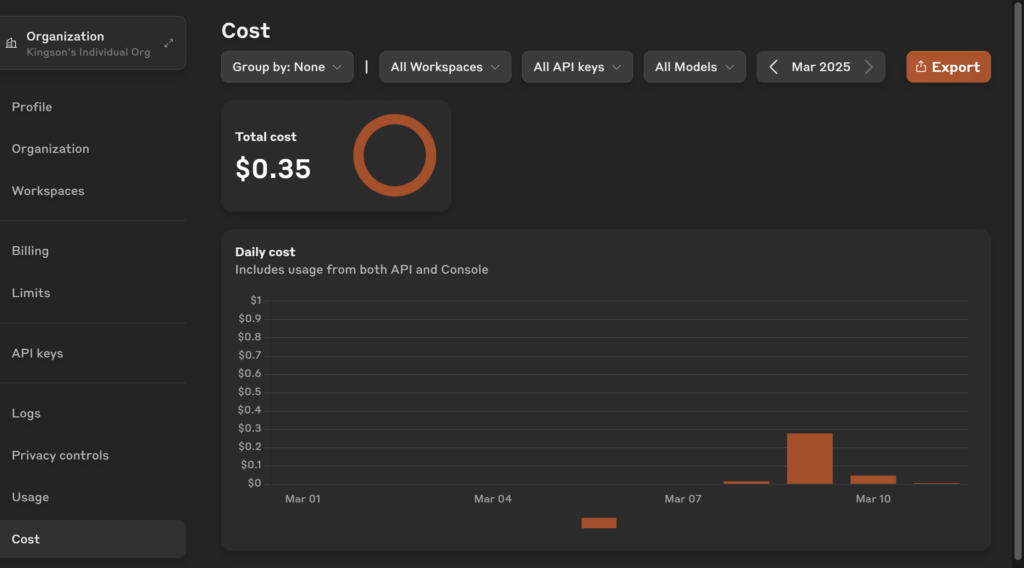

The price difference between the ChatGPT API and the Claude API turned out to be significant, even though I was only testing the app.

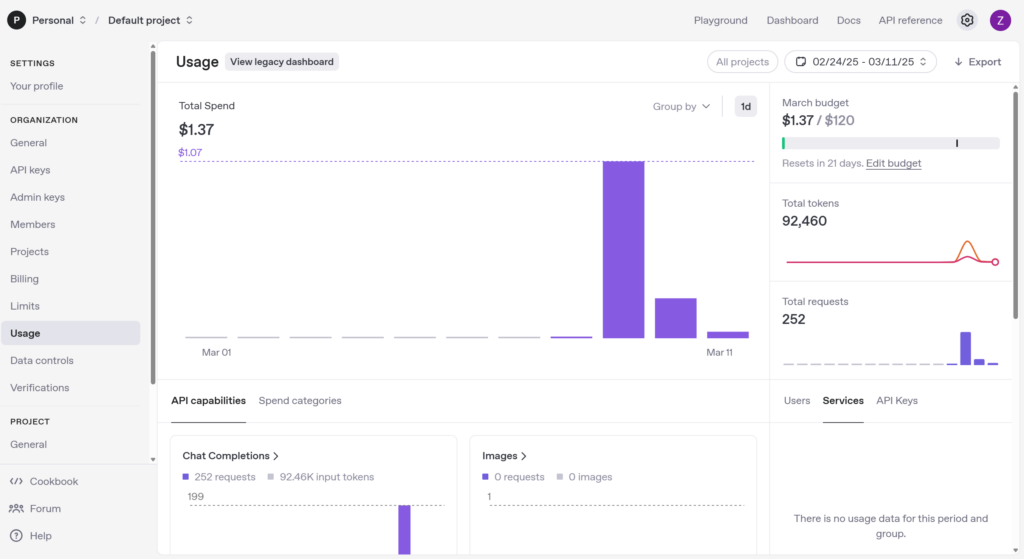

ChatGPT API cost:

Claude API cost:

I later found out that the reason was the very costly “gpt-4” model in my ChatGPT API call. To be honest, I was not paying attention, and I knew nothing about OpenAI's different models and their pricing. You don't need to make the same mistake: go to this page, choose a model that suits your budget, and set the “model” parameter in your ChatGPT API call to it:

https://platform.openai.com/docs/pricing
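One way to avoid hard-coding an expensive model is to pick it in a single place. Below is a minimal sketch. It assumes a hypothetical `OPENAI_MODEL` environment variable (my own naming, not an OpenAI convention) and falls back to “gpt-4o-mini”. `buildChatRequest` is also a hypothetical helper. You would pass its result as the request body to `axios.post`.

```javascript
// Hypothetical default; pick whichever model fits your budget on the pricing page
const DEFAULT_MODEL = "gpt-4o-mini";

// Hypothetical helper: build the Chat Completions request body.
// OPENAI_MODEL is an assumed environment variable name.
function buildChatRequest(prompt, model = process.env.OPENAI_MODEL || DEFAULT_MODEL) {
  return {
    model,
    messages: [{ role: "user", content: prompt }],
    max_tokens: 100,
  };
}

console.log(buildChatRequest("Who should win this debate?").model);
```

Keeping the model out of the request code means switching models for a test run does not require touching `getChatGPTResponse` at all.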

At the time of writing, “gpt-4o-mini” is the cheapest model. The “gpt-4” model I started with is up to 200 times more expensive than “gpt-4o-mini”! I switched my API call to “gpt-4o-mini” and ran some tests. I could not tell any difference in reasoning ability; the only change I noticed was that responses took a bit longer than with “gpt-4”.
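To see what a gap like that means for your own app, you can turn token counts into dollars. This is a rough sketch. It assumes per-million-token list prices of $30 input / $60 output for “gpt-4” and $0.15 input / $0.60 output for “gpt-4o-mini”. Those numbers are assumptions that change over time, so check the pricing page before relying on them. `estimateCostUSD` is a hypothetical helper.

```javascript
// ASSUMED prices in USD per 1M tokens; verify against OpenAI's pricing page
const PRICES_PER_MILLION = {
  "gpt-4": { input: 30.0, output: 60.0 },
  "gpt-4o-mini": { input: 0.15, output: 0.6 },
};

// Hypothetical helper: cost of one call from its `usage` token counts
function estimateCostUSD(model, usage) {
  const price = PRICES_PER_MILLION[model];
  if (!price) throw new Error(`No price entry for model: ${model}`);
  const inputCost = usage.prompt_tokens * price.input;
  const outputCost = usage.completion_tokens * price.output;
  return (inputCost + outputCost) / 1e6;
}

const usage = { prompt_tokens: 1000, completion_tokens: 100 };
const expensive = estimateCostUSD("gpt-4", usage); // 0.036
const cheap = estimateCostUSD("gpt-4o-mini", usage); // 0.00021
console.log(`gpt-4: $${expensive.toFixed(5)}, gpt-4o-mini: $${cheap.toFixed(5)}, ratio: ${(expensive / cheap).toFixed(0)}x`);
```

With this mix of tokens the gap is about 170x. Under these assumed prices, prompt-heavy traffic pushes it toward 200x and reply-heavy traffic toward 100x.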